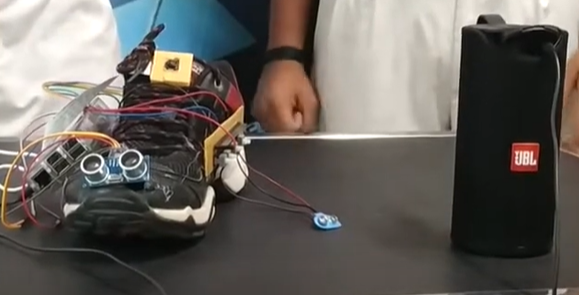

The Blind Shoes project is an assistive device for individuals who are blind or both deaf and blind. The device integrates several sensors and modules with a Raspberry Pi to provide real-time navigation assistance and emergency communication. It is designed to enhance the user’s independence and safety by offering haptic or auditory feedback, depending on the selected mode, and by sending emergency alerts when needed.

Components Required

- Raspberry Pi (with Internet connection)

- Camera Module

- Ultrasonic Sensor (HC-SR04)

- GPS Module

- Vibro Motors (2 units)

- Speaker

- Buttons (4 units)

- Resistors (4 units, 10kΩ each)

- Breadboard and Jumper Wires

- Power Supply

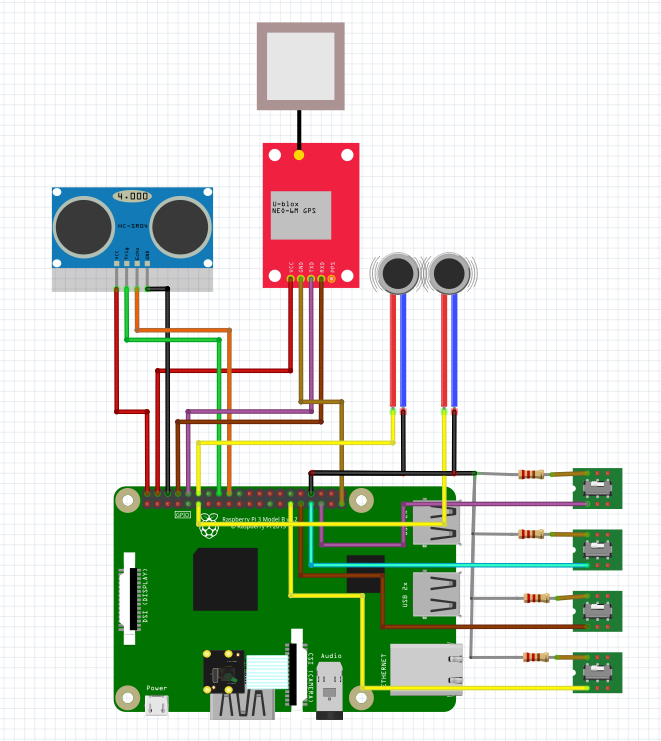

Circuit Connection

- Camera Module:

- Connect the camera module to the dedicated camera interface on the Raspberry Pi.

- Ultrasonic Sensor:

- VCC to 5V

- GND to GND

- Trig to GPIO pin 23

- Echo to GPIO pin 24

- GPS Module:

- VCC to 3.3V or 5V (depending on your module)

- GND to GND

- TX to GPIO pin 15 (UART RX)

- RX to GPIO pin 14 (UART TX)

- Vibro Motors:

- Connect the first motor to GPIO pin 17 and GND

- Connect the second motor to GPIO pin 18 and GND

- Speaker:

- Connect to the Pi’s audio output (e.g., the 3.5mm jack, or a USB sound card if your Pi doesn’t have an audio jack)

- Buttons:

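- Button 1: Connect between GPIO pin 4 and 3.3V, with a 10kΩ pull-down resistor from the GPIO pin to GND

- Button 2: Connect between GPIO pin 5 and 3.3V, with a 10kΩ pull-down resistor from the GPIO pin to GND

- Button 3: Connect between GPIO pin 6 and 3.3V, with a 10kΩ pull-down resistor from the GPIO pin to GND

- Button 4: Connect between GPIO pin 13 and 3.3V, with a 10kΩ pull-down resistor from the GPIO pin to GND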
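
The wiring above can be captured as a small pin map so that the code and the circuit do not drift apart. The following is a minimal sketch: the dictionary `PINS` and the helper `find_duplicate_pins` are hypothetical names, the left/right motor labels follow the variable names used in the project code, and the pin numbers are the BCM numbers from the list above.

```python
# Hypothetical pin map mirroring the wiring list (BCM numbering).
PINS = {
    "ultrasonic_trig": 23,
    "ultrasonic_echo": 24,
    "gps_uart_rx": 15,   # receives the GPS module's TX line
    "gps_uart_tx": 14,   # drives the GPS module's RX line
    "vibro_left": 17,
    "vibro_right": 18,
    "button_emergency": 4,
    "button_mode": 5,
    "button_stream": 6,
    "button_help": 13,
}


def find_duplicate_pins(pins):
    """Return a sorted list of BCM numbers assigned to more than one role."""
    seen = set()
    duplicates = set()
    for number in pins.values():
        if number in seen:
            duplicates.add(number)
        seen.add(number)
    return sorted(duplicates)


if __name__ == "__main__":
    # An empty list means every role has its own pin.
    print(find_duplicate_pins(PINS))
```

Keeping the numbers in one place makes a mismatch between the wiring and the script easier to spot.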

Circuit diagram is given below:

Working

- Emergency Button (Button 1):

- When pressed, this button triggers an email containing the user’s live location (obtained from the GPS module) to a pre-configured email address. This is crucial for situations where the user needs immediate help.

- Mode Switch Button (Button 2):

- This button toggles between two modes:

- Deaf and Blind Mode: Uses the vibro motors to signal guidance based on the input from the ultrasonic sensor. In the code below, which reads a single sensor, the left motor vibrates when an obstacle is closer than 20 cm and the right motor vibrates when it is between 20 cm and 50 cm.

- Blind Mode: Uses a speaker to provide audible instructions. The device speaks directions such as “move left” or “move right” based on the input from the ultrasonic sensor.

- Live Streaming Button (Button 3):

- When pressed, this button initiates live streaming through the camera module and sends an emergency email stating “I am in trouble” along with the streaming link to the designated email address.

- Help Button (Button 4):

- This button also initiates live streaming and sends an email with a specific request, such as “Help me to buy groceries,” along with the streaming link to the given email address.
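
The button behaviour described above can be sketched as a small dispatch function that does not depend on the GPIO library, which makes it easy to test off the device. The names `toggle_mode` and `handle_button` and the action strings are hypothetical, chosen only for illustration.

```python
def toggle_mode(mode):
    """Switch between Blind Mode and Deaf and Blind Mode."""
    return "deaf_blind" if mode == "blind" else "blind"


def handle_button(button, mode):
    """Map a pressed button (1-4) to an action name and the resulting mode.

    The action names are placeholders; the caller decides how to carry
    them out (send an email, start the camera, and so on).
    """
    if button == 1:
        return "send_location_email", mode
    if button == 2:
        return "mode_changed", toggle_mode(mode)
    if button == 3:
        return "stream_and_email:I am in trouble", mode
    if button == 4:
        return "stream_and_email:Help me to buy groceries", mode
    raise ValueError(f"unknown button: {button}")


if __name__ == "__main__":
    mode = "blind"
    for pressed in (2, 1, 2):
        action, mode = handle_button(pressed, mode)
        print(pressed, action, mode)
```

Only Button 2 changes the mode; the other three leave it untouched and just report which action to run.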

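Code is given below:

    import RPi.GPIO as GPIO
    import time
    import smtplib
    from email.mime.text import MIMEText
    from picamera import PiCamera
    import gps
    import subprocess

    # Setup: use Broadcom (BCM) pin numbering
    GPIO.setmode(GPIO.BCM)

    # Buttons: emergency, mode switch, live streaming, help
    # (these pins match the wiring list and the main loop below)
    buttons = [4, 5, 6, 13]
    for button in buttons:
        GPIO.setup(button, GPIO.IN, pull_up_down=GPIO.PUD_DOWN)

    # Vibro Motors
    vibro_left = 17
    vibro_right = 18
    GPIO.setup(vibro_left, GPIO.OUT)
    GPIO.setup(vibro_right, GPIO.OUT)

    # Ultrasonic Sensor
    TRIG = 23
    ECHO = 24
    GPIO.setup(TRIG, GPIO.OUT)
    GPIO.setup(ECHO, GPIO.IN)

    # Camera
    camera = PiCamera()

    # Email Setup
    SMTP_SERVER = 'smtp.gmail.com'
    SMTP_PORT = 587
    EMAIL_USER = 'your_email@gmail.com'
    EMAIL_PASSWORD = 'your_password'
    TO_EMAIL = 'destination_email@gmail.com'


    def send_email(subject, body):
        """Send a plain-text email over SMTP with STARTTLS."""
        msg = MIMEText(body)
        msg['Subject'] = subject
        msg['From'] = EMAIL_USER
        msg['To'] = TO_EMAIL
        # The context manager closes the connection even if sending fails
        with smtplib.SMTP(SMTP_SERVER, SMTP_PORT) as server:
            server.starttls()
            server.login(EMAIL_USER, EMAIL_PASSWORD)
            server.sendmail(EMAIL_USER, TO_EMAIL, msg.as_string())


    def get_location(max_reports=20):
        """Return the latest GPS fix from gpsd, or a fallback message."""
        session = gps.gps()
        session.stream(gps.WATCH_ENABLE | gps.WATCH_NEWSTYLE)
        # gpsd sends other report classes (for example VERSION) before
        # position (TPV) reports, so read several reports, not only the first
        for _ in range(max_reports):
            report = session.next()
            if (report['class'] == 'TPV'
                    and hasattr(report, 'lat') and hasattr(report, 'lon')):
                return f"lat: {report.lat}, lon: {report.lon}"
        return "Location not found"


    def measure_distance(timeout=0.05):
        """Return the distance to the nearest obstacle in cm (HC-SR04)."""
        GPIO.output(TRIG, False)
        # Short settle time; a 2 s wait here would stall button polling
        time.sleep(0.06)

        # A 10 µs pulse on TRIG starts a measurement
        GPIO.output(TRIG, True)
        time.sleep(0.00001)
        GPIO.output(TRIG, False)

        # Initialise both timestamps so they exist even if a loop never runs,
        # and give up after `timeout` seconds instead of hanging forever
        pulse_start = pulse_end = time.time()
        deadline = pulse_start + timeout
        while GPIO.input(ECHO) == 0 and time.time() < deadline:
            pulse_start = time.time()
        while GPIO.input(ECHO) == 1 and time.time() < deadline:
            pulse_end = time.time()

        if pulse_end <= pulse_start:
            # No echo received: treat the path as out of range
            return float('inf')

        pulse_duration = pulse_end - pulse_start
        # Speed of sound is about 34300 cm/s; halve it for the round trip
        distance = pulse_duration * 17150
        return round(distance, 2)


    def deaf_blind_mode():
        """Signal obstacle distance through the vibro motors."""
        distance = measure_distance()
        if distance < 20:
            GPIO.output(vibro_left, GPIO.HIGH)
            GPIO.output(vibro_right, GPIO.LOW)
        elif distance < 50:
            GPIO.output(vibro_left, GPIO.LOW)
            GPIO.output(vibro_right, GPIO.HIGH)
        else:
            GPIO.output(vibro_left, GPIO.LOW)
            GPIO.output(vibro_right, GPIO.LOW)


    def blind_mode():
        """Speak a direction through the speaker using espeak."""
        distance = measure_distance()
        if distance < 20:
            subprocess.call(['espeak', 'move left'])
        elif distance < 50:
            subprocess.call(['espeak', 'move right'])
        else:
            subprocess.call(['espeak', 'all clear'])


    def capture_streaming(subject, message):
        """Record a 10 s clip, then email the message with the stream link."""
        camera.start_recording('/home/pi/video.h264')
        time.sleep(10)
        camera.stop_recording()
        send_email(subject, f"{message} Find the live stream here: [URL to your streaming service]")


    try:
        mode = "blind"  # Default mode
        while True:
            if GPIO.input(4) == GPIO.HIGH:  # Button 1: emergency
                send_email("Emergency", f"Help me! My location is: {get_location()}")
                time.sleep(1)
            if GPIO.input(5) == GPIO.HIGH:  # Button 2: mode switch
                mode = "deaf_blind" if mode == "blind" else "blind"
                time.sleep(1)
            if GPIO.input(6) == GPIO.HIGH:  # Button 3: live streaming
                capture_streaming("Emergency", "I am in trouble.")
                time.sleep(1)
            if GPIO.input(13) == GPIO.HIGH:  # Button 4: help
                capture_streaming("Help me to buy groceries", "Help me to buy groceries.")
                time.sleep(1)
            if mode == "deaf_blind":
                deaf_blind_mode()
            else:
                blind_mode()
    finally:
        camera.close()
        GPIO.cleanup()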
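
The factor 17150 in `measure_distance` comes from the speed of sound: roughly 34,300 cm/s in air at room temperature, halved because the echo pulse covers the trip to the obstacle and back. A minimal sketch of that arithmetic, together with the 20 cm and 50 cm bands used by the two modes, is shown below. The function names `pulse_to_cm` and `distance_band` and the band labels are hypothetical.

```python
SPEED_OF_SOUND_CM_PER_S = 34300  # approximate value in air at about 20 °C


def pulse_to_cm(pulse_duration_s):
    """Convert an HC-SR04 echo pulse width in seconds to a distance in cm."""
    # The pulse covers the round trip, so only half of it is the distance.
    return round(pulse_duration_s * SPEED_OF_SOUND_CM_PER_S / 2, 2)


def distance_band(distance_cm):
    """Classify a distance using the same thresholds as the mode functions."""
    if distance_cm < 20:
        return "near"      # left motor / "move left"
    if distance_cm < 50:
        return "medium"    # right motor / "move right"
    return "clear"         # motors off / "all clear"


if __name__ == "__main__":
    for width in (0.001, 0.002, 0.005):
        cm = pulse_to_cm(width)
        print(width, cm, distance_band(cm))
```

Separating the conversion from the GPIO timing loop lets the thresholds be checked without a sensor attached.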

Notes:

- Replace `"your_email@gmail.com"` and `"your_password"` with your actual email credentials.

- The email functionality will work on Windows as it uses standard Python libraries.

- `gps3` is used for GPS functionalities.

- `pyttsx3` is used for text-to-speech in place of `espeak` on Windows.

- The `RPi.GPIO` and `picamera` libraries are mocked for testing purposes on Windows.
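
One way to mock `RPi.GPIO` for testing off the Pi is to register a stand-in in `sys.modules` before the main script is imported. The sketch below is an assumption about how such a mock could look, not the mock actually used by this project. `FakeGPIO` and `install_fake_gpio` are hypothetical names, and only the calls used in the script above are covered.

```python
import sys
import types


class FakeGPIO:
    """Minimal stand-in for the parts of RPi.GPIO that the script uses."""

    BCM = "BCM"
    IN = "IN"
    OUT = "OUT"
    HIGH = 1
    LOW = 0
    PUD_DOWN = "PUD_DOWN"

    def __init__(self):
        self.mode = None
        self.levels = {}  # pin number -> current level

    def setmode(self, mode):
        self.mode = mode

    def setup(self, pin, direction, pull_up_down=None):
        # Inputs with a pull-down and fresh outputs both start LOW.
        self.levels[pin] = self.LOW

    def output(self, pin, level):
        self.levels[pin] = self.HIGH if level else self.LOW

    def input(self, pin):
        return self.levels.get(pin, self.LOW)

    def cleanup(self):
        self.levels.clear()


def install_fake_gpio():
    """Make `import RPi.GPIO as GPIO` resolve to a FakeGPIO instance."""
    fake = FakeGPIO()
    package = types.ModuleType("RPi")
    package.GPIO = fake
    sys.modules["RPi"] = package
    sys.modules["RPi.GPIO"] = fake
    return fake


if __name__ == "__main__":
    install_fake_gpio()
    import RPi.GPIO as GPIO  # now resolves to the fake

    GPIO.setmode(GPIO.BCM)
    GPIO.setup(17, GPIO.OUT)
    GPIO.output(17, GPIO.HIGH)
    print(GPIO.input(17))
```

A test can simulate a button press by setting `fake.levels[4] = FakeGPIO.HIGH` before the code under test reads the pin.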

Features

- Emergency Alerts:

- Quick and easy way to send emergency alerts with the user’s live location to a pre-configured email address.

- Mode Switching:

- Allows the user to switch between Deaf and Blind Mode and Blind Mode, adapting the navigation feedback to their specific needs.

- Haptic Feedback:

- In deaf and blind mode, vibro motors provide tactile feedback to help the user navigate around obstacles.

- Auditory Feedback:

- In blind mode, the device uses a speaker to give verbal directions, helping the user navigate safely.

- Live Streaming:

- The device can initiate live streaming in emergency situations or when specific help is needed, providing real-time video feed to the caregiver or family member.

- User-Friendly Interface:

- Simple button interface makes it easy for the user to interact with the device and trigger the necessary actions.

Application:

Helping with Navigation:

- This project helps people who are blind or have trouble seeing by giving them sensory feedback, like vibrations or audio cues, to navigate their surroundings safely.

Emergency Communication and Location Sharing:

- The device lets users quickly send emergency alerts with their live location to caregivers or emergency contacts via email, which is crucial when immediate help is needed.
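
As an illustration of the emergency alert, the sketch below builds the email with the standard library's `EmailMessage` and adds a map link for the coordinates. The function name `build_emergency_email`, the sample coordinates, and the choice of an OpenStreetMap marker link are assumptions made for illustration; sending would still go through `smtplib` as in the code above.

```python
from email.message import EmailMessage


def build_emergency_email(sender, recipient, lat, lon):
    """Return an EmailMessage carrying the user's coordinates."""
    msg = EmailMessage()
    msg["Subject"] = "Emergency"
    msg["From"] = sender
    msg["To"] = recipient
    if lat is None or lon is None:
        # No GPS fix yet: say so rather than sending empty data.
        body = "Help me! My location could not be determined."
    else:
        link = f"https://www.openstreetmap.org/?mlat={lat:.5f}&mlon={lon:.5f}"
        body = f"Help me! My location is: lat {lat:.5f}, lon {lon:.5f}\n{link}"
    msg.set_content(body)
    return msg


if __name__ == "__main__":
    # Placeholder addresses and coordinates, for illustration only.
    message = build_emergency_email(
        "your_email@gmail.com", "destination_email@gmail.com", 12.9716, 77.5946
    )
    print(message["Subject"])
    print(message.get_content())
```

Handling the missing-fix case explicitly keeps the alert useful even when the GPS module has not locked on yet.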

Adaptive Navigation Assistance:

- With ultrasonic sensors and vibro motors, the device provides directional feedback to guide users, improving their independence and mobility in different environments.

Multi-Mode Functionality:

- The device has two modes: one for deaf and blind individuals and one for blind individuals. Users can choose between vibration or speech feedback for navigation, based on their needs.

Live Streaming for Remote Assistance:

- The device can stream live video through its camera, allowing users to get real-time help from caregivers or family members during emergencies or for daily tasks like shopping.

Healthcare and Elderly Care:

- This project can also be used in healthcare and elderly care settings to monitor and assist patients or elderly individuals who may need supervision or emergency support.